Schema changes have always been risky because a schema isn’t just columns—it’s the interface between data producers and data consumers. Historically, that interface was rigid, which made any change expensive. Modern lakehouse design solves the problem structurally: a Medallion architecture separates where variation is tolerated (Bronze) from where commitment is made (Silver) and relied upon (Gold). In Microsoft Fabric, those roles map cleanly to Lakehouse, Warehouse, and Power BI’s semantic layer, with governance and domain‑oriented (data‑product) design tying it all together. By the end, you’ll see why schema evolution is both inevitable and manageable—and how Fabric builds that manageability into the platform.
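To make the Bronze/Silver split concrete, here is a minimal Python sketch of the kind of check that could gate promotion from Bronze to Silver. Bronze accepts whatever columns arrive. Silver tolerates additive changes (new columns) but treats dropped columns and type changes as breaking. The names `check_promotion` and `PromotionResult`, and the string type names, are hypothetical illustrations, not a Fabric API.

```python
from dataclasses import dataclass, field

# Hypothetical schema representation: column name -> type name (as a string).
Schema = dict[str, str]


@dataclass
class PromotionResult:
    allowed: bool
    added: list[str] = field(default_factory=list)     # new columns tolerated as additive
    problems: list[str] = field(default_factory=list)  # breaking changes that block promotion


def check_promotion(silver_contract: Schema, incoming: Schema) -> PromotionResult:
    """Compare an incoming Bronze batch schema against the Silver contract."""
    problems = []
    for name, dtype in silver_contract.items():
        if name not in incoming:
            problems.append(f"missing column: {name}")
        elif incoming[name] != dtype:
            problems.append(f"type change on {name}: {dtype} -> {incoming[name]}")
    added = sorted(set(incoming) - set(silver_contract))
    return PromotionResult(allowed=not problems, added=added, problems=problems)


contract = {"order_id": "bigint", "amount": "decimal(18,2)"}

# Additive change: a new column arrives in Bronze, so promotion is allowed.
ok = check_promotion(contract, {**contract, "channel": "string"})
print(ok.allowed, ok.added)  # True ['channel']

# Breaking change: a type changes and a column disappears, so promotion stops.
bad = check_promotion(contract, {"order_id": "string"})
print(bad.allowed, bad.problems)
```

In a Fabric Lakehouse you would more likely lean on Delta Lake's built-in schema enforcement and its `mergeSchema` write option; this sketch only isolates the decision logic of "tolerate additive change, stop on breaking change."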

Continue reading “Making Schema Change Boring: A Short History—and How Microsoft Fabric’s Medallion Lakehouse Bakes It In”

A Practical Introduction to Star Schema Data Architecture

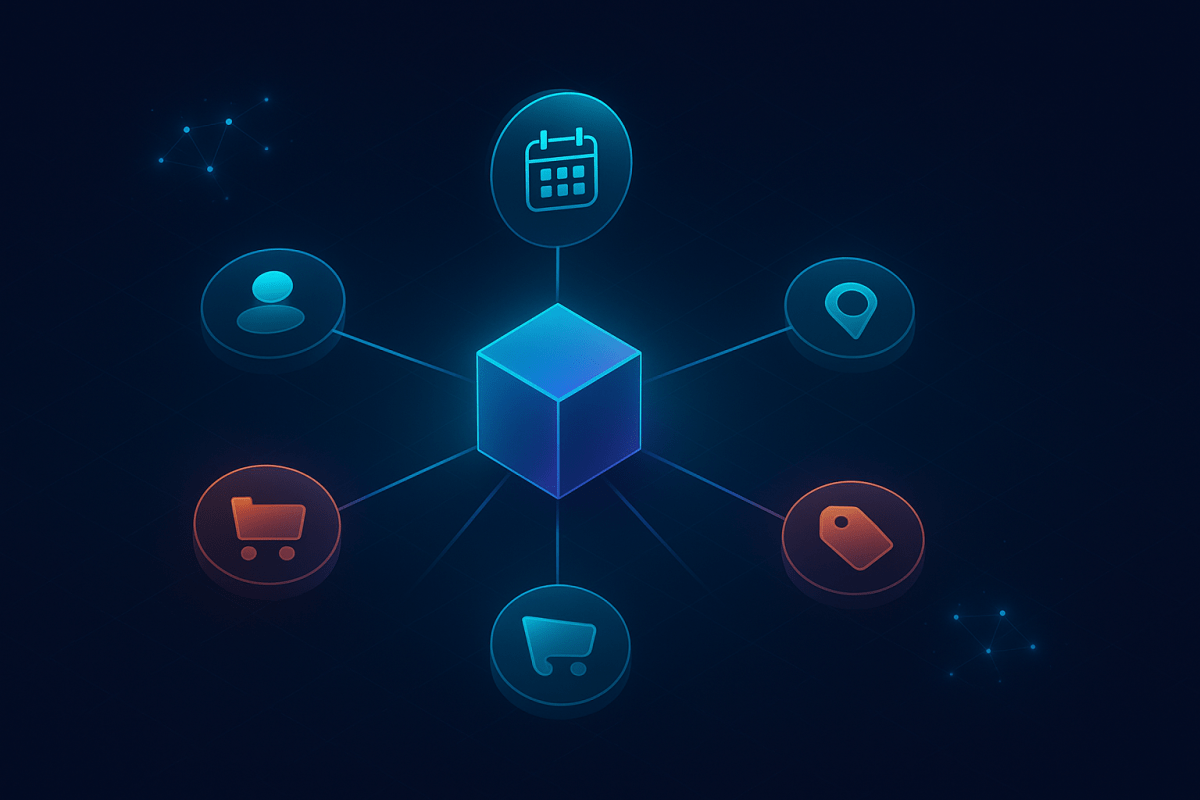

Dimensional modeling remains the most effective way to make analytics fast, understandable, and resilient. The star schema sits at the center of that approach: a simple structure in which fact tables record measurable events and denormalized dimension tables provide descriptive context. In this post, we’ll lay out the core ideas, clarify the often‑confused concept of snowflaking (and when it’s worth it), and show how to scale from a single star to a galaxy schema (a.k.a. fact constellation) without losing your footing.
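A typical star schema query joins the fact table to its dimensions on surrogate keys, then groups by descriptive attributes. The sketch below uses plain Python lists and dicts in place of tables; the table names (`fact_sales`, `dim_product`, `dim_date`) and the helper `sales_by` are hypothetical.

```python
from collections import defaultdict
from typing import Callable

# Dimension tables: surrogate key -> descriptive attributes (denormalized).
dim_product = {
    1: {"name": "Widget", "category": "Hardware"},
    2: {"name": "Gadget", "category": "Hardware"},
    3: {"name": "Support Plan", "category": "Services"},
}
dim_date = {
    20240101: {"year": 2024, "month": 1},
    20240201: {"year": 2024, "month": 2},
}

# Fact table: one row per sale, holding foreign keys plus numeric measures.
fact_sales = [
    {"product_key": 1, "date_key": 20240101, "quantity": 3, "amount": 30.0},
    {"product_key": 2, "date_key": 20240101, "quantity": 1, "amount": 25.0},
    {"product_key": 3, "date_key": 20240201, "quantity": 2, "amount": 200.0},
    {"product_key": 1, "date_key": 20240201, "quantity": 5, "amount": 50.0},
]


def sales_by(attribute_of: Callable[[dict], object], measure: str) -> dict:
    """Look up a dimension attribute for each fact row and sum a measure per value."""
    totals: defaultdict = defaultdict(float)
    for row in fact_sales:
        totals[attribute_of(row)] += row[measure]
    return dict(totals)


by_category = sales_by(lambda r: dim_product[r["product_key"]]["category"], "amount")
by_month = sales_by(lambda r: dim_date[r["date_key"]]["month"], "amount")
print(by_category)  # {'Hardware': 105.0, 'Services': 200.0}
print(by_month)     # {1: 55.0, 2: 250.0}
```

Each dimension here is a single flat table, one lookup away from the fact table. Snowflaking would move `category` into its own table referenced from `dim_product`, which adds a second lookup to every query that groups by category.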

Continue reading “A Practical Introduction to Star Schema Data Architecture”

Foundational + Derived Data Products in a Data Mesh

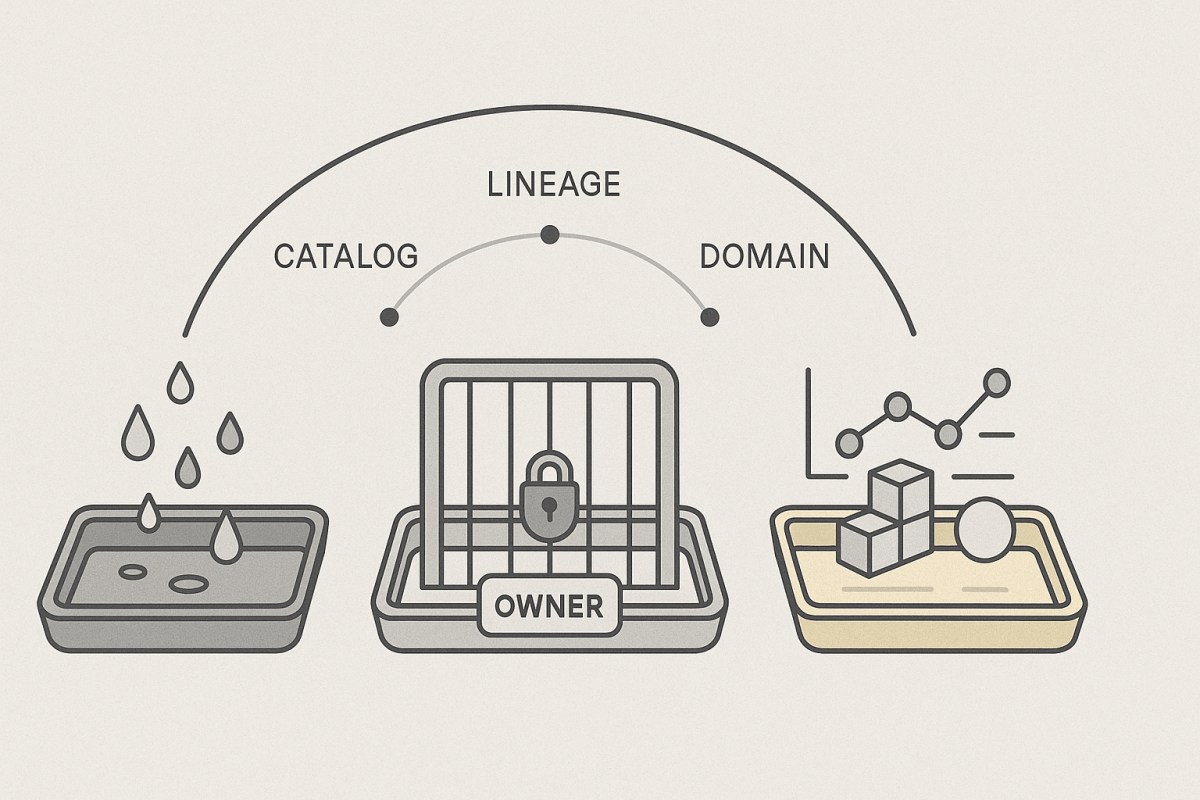

A data mesh is a sociotechnical approach to analytical data that decentralizes responsibility to business domains while standardizing the way data is produced and consumed. It’s grounded in four principles: domain ownership, data as a product, a self‑serve data platform, and federated governance. In practice, it asks each domain team to publish data as a product—discoverable, trustworthy, and operable—while a common platform automates cross‑cutting rules (access, lineage, quality, security).

Zhamak Dehghani frames a data product as an architectural quantum: the smallest independently deployable unit that bundles data, code, metadata, and policy, with a versioned contract and a clear interface (APIs or governed views). Treating both foundational and derived products as quanta is the key to decoupled evolution without breaking interoperability.
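One way to make the "versioned contract" concrete is semantic versioning on each product's output: additive changes bump the minor version, breaking changes bump the major version, and consumers pin a major version. The sketch below is a hypothetical descriptor, not Dehghani's specification or any platform's API; `DataProduct`, `Version`, `is_compatible`, and the example products are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Version:
    major: int
    minor: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        major, minor = text.split(".")
        return cls(int(major), int(minor))


@dataclass
class DataProduct:
    name: str
    domain: str                 # owning business domain
    version: Version            # version of the published output contract
    columns: dict[str, str]     # output contract: column -> type
    inputs: list[str] = field(default_factory=list)  # upstream products; empty if foundational

    @property
    def is_derived(self) -> bool:
        return bool(self.inputs)


def is_compatible(pinned: str, published: Version) -> bool:
    """A consumer pinned to 'major.minor' accepts any later minor within the same major."""
    want = Version.parse(pinned)
    return published.major == want.major and published.minor >= want.minor


orders = DataProduct("orders", "sales", Version(2, 3),
                     {"order_id": "bigint", "customer_id": "bigint", "amount": "decimal(18,2)"})
ltv = DataProduct("customer_ltv", "marketing", Version(1, 0),
                  {"customer_id": "bigint", "ltv": "decimal(18,2)"}, inputs=["orders"])

print(orders.is_derived, ltv.is_derived)   # False True
print(is_compatible("2.1", orders.version))  # True
print(is_compatible("1.4", orders.version))  # False
```

Attaching the version to the contract rather than to the pipeline is what lets the foundational `orders` product change its internals freely: as long as it ships only additive (minor) changes, the derived `customer_ltv` product keeps working.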

Continue reading “Foundational + Derived Data Products in a Data Mesh”

“Zero Copy” Doesn’t Mean “No Copies.” It Means “No Unmanaged Copies.”

The rallying cry of modern data platforms, Zero Copy, matters because it flips the default: don’t move data unless there’s a good reason, and when you do, let the platform manage the copy for you. In Microsoft Fabric, that starts with in-place access via OneLake Shortcuts and an open storage layer, then selectively uses managed and automated copies (like Mirroring and Materialized Lake Views) where they deliver clear value. The result is less sprawl, more trust, and faster analytics, without hand-built duplication.

Continue reading ““Zero Copy” Doesn’t Mean “No Copies.” It Means “No Unmanaged Copies.””

A Lightweight Ingestion Framework in Microsoft Fabric

Modern Fabric estates don’t need a forest of bespoke pipelines, but they do need metadata-driven tools to reduce time to insight. You can land data quickly in Bronze, promote it reliably to Silver and Gold with a metadata‑driven Spark Structured Streaming engine, and treat Gold as the foundation for your data products—semantic models, AI endpoints, and any other served formats.
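The core idea of a metadata-driven framework is that onboarding a table means adding a metadata entry, not writing a pipeline. The sketch below uses plain Python and hypothetical names (`TableSpec`, `Step`, `promotion_plan`). It only expands metadata into an ordered Bronze → Silver → Gold plan; it executes nothing, and the Spark Structured Streaming work the post describes is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableSpec:
    """Hypothetical metadata entry describing one source table."""
    source: str
    keys: tuple[str, ...]        # business keys used when merging into Silver
    publish_gold: bool = True    # whether this table feeds a Gold output


@dataclass(frozen=True)
class Step:
    action: str                  # "land", "merge", or "publish"
    source: str
    target: str
    keys: tuple[str, ...] = ()


def promotion_plan(specs: list[TableSpec]) -> list[Step]:
    """Expand table metadata into ordered Bronze -> Silver -> Gold steps.

    A real engine would execute each step, for example as a Structured
    Streaming query that reads one table and writes the next; this sketch
    only builds the plan so the metadata-driven shape is visible.
    """
    plan: list[Step] = []
    for spec in specs:
        bronze, silver, gold = (f"{layer}.{spec.source}" for layer in ("bronze", "silver", "gold"))
        plan.append(Step("land", spec.source, bronze))
        plan.append(Step("merge", bronze, silver, spec.keys))
        if spec.publish_gold:
            plan.append(Step("publish", silver, gold))
    return plan


specs = [
    TableSpec("customers", ("customer_id",)),
    TableSpec("orders", ("order_id",), publish_gold=False),
]
plan = promotion_plan(specs)
for step in plan:
    print(step.action, step.source, "->", step.target)
```

Keeping the plan separate from execution makes the metadata easy to validate and review before anything runs; the engine that consumes the plan can then stay generic.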

Continue reading “A Lightweight Ingestion Framework in Microsoft Fabric”

Managing Data Platform Projects the Agile Way—and Hitting Your Milestones

One thing I’ve been thinking about a lot lately is how to formalize the kind of project management that data platform projects need, and what you have to do differently compared to software development projects. I brought in a collaborator, one of the best customer success managers I know, to talk about how to do this well.

Agile absolutely works for data platform projects, but you need a lightweight way to lock in critical choices without slowing teams down. Architectural Decision Records (ADRs) provide that spine: they capture why you chose a direction, what you rejected, and the consequences—so you can move fast and keep delivery predictable. Combine ADRs with vertical slices, data contracts, quality gates, and observable pipelines, and you can ship in short cycles while meeting real dates.

Continue reading “Managing Data Platform Projects the Agile Way—and Hitting Your Milestones”