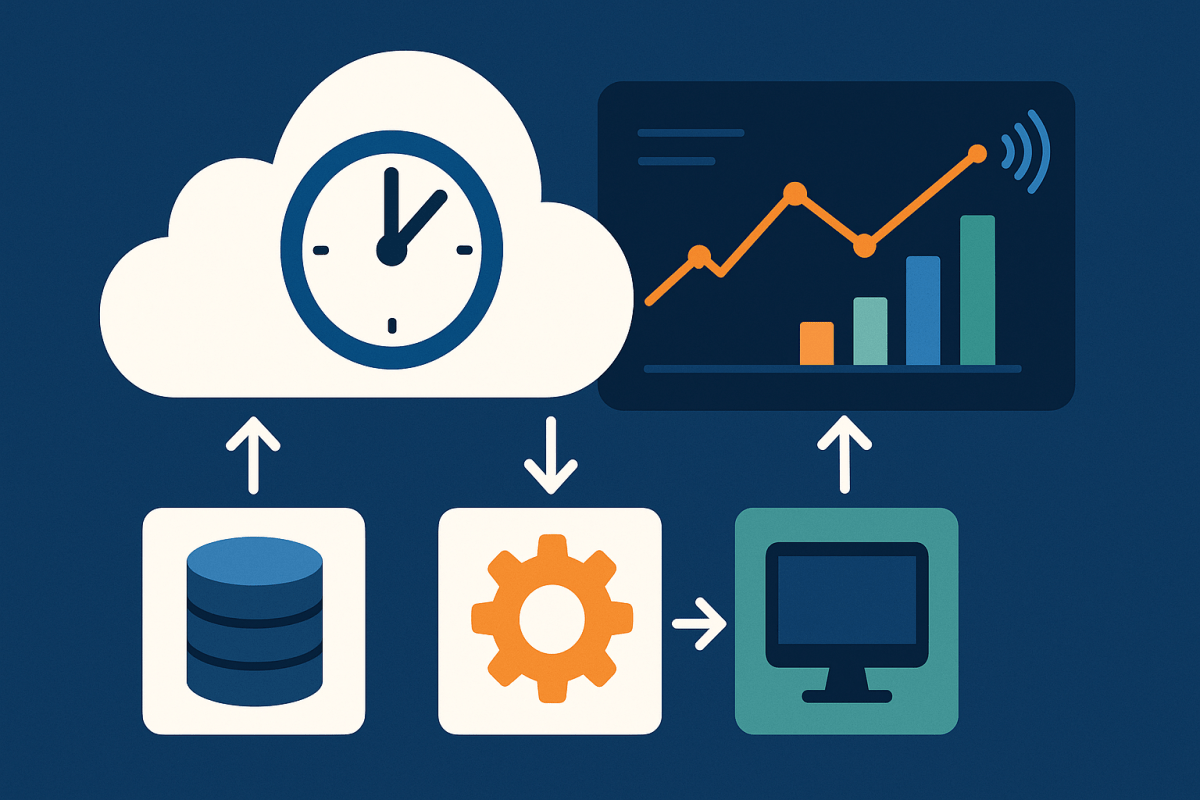

In Microsoft Fabric, you’re sitting on top of Delta Lake tables in OneLake. If you turn on Delta Change Data Feed (CDF) for those tables, Delta records row-level inserts, updates, and deletes (including pre- and post-images for updates) and lets you read just the changes between table versions. That makes incremental processing for SCDs (Type 1/2) and Data Vault satellites dramatically simpler and cheaper, because you aren’t rescanning entire tables; you’re just consuming the “diff.” Fabric’s Lakehouse fully supports this because it’s natively Delta. Mirrored databases land in OneLake as Delta too, but (as of September 2025) Microsoft hasn’t documented a supported way to enable Delta CDF on the mirrored tables themselves. You can still analyze mirrored data with Spark via Lakehouse shortcuts, or source CDC upstream (Real-Time hub) and write to your own Delta tables with CDF enabled.
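To make the mechanics concrete, here is a minimal Python sketch, not a production pattern. It assumes a Fabric notebook where a `spark` session is already available and a Lakehouse table called `sales.customers` with an `id` key column; both the table name and the helper function names are hypothetical. The first two helpers show the Delta table property and reader options that CDF uses. The third is a plain-Python illustration of what “consuming the diff” means for a Type 1 dimension; in a real pipeline you would more likely feed the change rows into a Delta `MERGE`.

```python
from typing import Any, Dict, Iterable, Optional


def enable_cdf_sql(table: str) -> str:
    """Build the SQL that turns on Change Data Feed for an existing Delta table."""
    return f"ALTER TABLE {table} SET TBLPROPERTIES (delta.enableChangeDataFeed = true)"


def read_changes(spark: Any, table: str, start_version: int,
                 end_version: Optional[int] = None) -> Any:
    """Return a DataFrame holding only the rows changed in the version range.

    `spark` is assumed to be the notebook's SparkSession. Nothing here runs
    until you call this function with a live session.
    """
    reader = (
        spark.read.format("delta")
        .option("readChangeFeed", "true")
        .option("startingVersion", start_version)
    )
    if end_version is not None:
        reader = reader.option("endingVersion", end_version)
    return reader.table(table)


def apply_changes_type1(current: Dict[Any, dict], changes: Iterable[dict],
                        key: str = "id") -> Dict[Any, dict]:
    """Apply CDF-style change rows to an in-memory Type 1 (overwrite) dimension."""
    result = dict(current)
    # Process commits in order; sorted() is stable, so row order within a commit is kept.
    for row in sorted(changes, key=lambda r: r["_commit_version"]):
        kind = row["_change_type"]
        if kind == "update_preimage":
            continue  # the "before" image is not needed for Type 1
        if kind == "delete":
            result.pop(row[key], None)
        else:  # "insert" or "update_postimage"
            # Drop CDF metadata columns such as _change_type and _commit_version.
            result[row[key]] = {k: v for k, v in row.items() if not k.startswith("_")}
    return result


# Hypothetical sample data shaped like rows read from the change feed.
current = {1: {"id": 1, "city": "Oslo"}, 2: {"id": 2, "city": "Bergen"}}
changes = [
    {"id": 1, "city": "Oslo", "_change_type": "update_preimage", "_commit_version": 3},
    {"id": 1, "city": "Tromso", "_change_type": "update_postimage", "_commit_version": 3},
    {"id": 2, "city": "Bergen", "_change_type": "delete", "_commit_version": 4},
    {"id": 3, "city": "Stavanger", "_change_type": "insert", "_commit_version": 4},
]
state = apply_changes_type1(current, changes)
```

In a notebook, you would run `spark.sql(enable_cdf_sql("sales.customers"))` once, then call `read_changes(spark, "sales.customers", start_version)` on each incremental load, tracking the last `_commit_version` you processed. For Type 2, the pre-image rows that this sketch skips are what let you close out the previous version of a record.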

This feature is underutilized today, but once Mirrored databases support CDF, it’s going to be a must-have in every data engineer’s toolkit.
