This post continues our hypothetical study of how high school coaches (specifically football coaches) can adopt data technologies to save time and stay focused on coaching and developing their players and students.

You’ve got the Saturday grading workflow dialed in (position tabs, per‑player per‑play grades, simple notes, and a Monday “Team Sheet”). Now let’s zoom out: how do you turn that weekly rhythm into cumulative insight—tracking every kid’s growth across the season (and across seasons), spotting unit‑level trends, and making November decisions with March‑level clarity? The short answer: pair your staff’s grading discipline with a data engineer who stands up a light, durable analytics stack in Microsoft Fabric and Power BI. Fabric gives you one place to land, shape, secure, and publish your data—end‑to‑end—without stitching together a dozen services.

Below is a coach‑first, high‑level guide to “what we’ll do” and “who does what,” building directly on the grading app/process described in the post you just read.

The Coach–Data Engineer Playbook

Coaches (what you already do):

- Grade each snap with the simple rubric (+ / 0 / – / ME) and one‑phrase notes where they matter.

- Tag a handful of teaching reps.

- Keep the grading standards consistent so the data stays trustworthy.

Data Engineer (what they add):

- Wire up a single, durable data lake and semantic model so Saturday’s grades automatically become Monday’s dashboards—and season trends.

- Ensure privacy (players only see their data), reliability (no “did it refresh?” panic), and speed (reports open instantly).

- Set and enforce definitions so “Grade %,” “ME rate,” and “Explosive” mean the same thing in October as they did in Week 1.
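Here is a minimal Python sketch of what "set and enforce definitions" can mean in practice. The formulas are assumptions for illustration, not a standard: Grade % is taken as the share of graded snaps marked "+", and ME rate as the share marked "ME". In the real stack these would be measures in the Power BI semantic model; plain Python just makes the arithmetic easy to check. "Explosive" depends on a yardage threshold your staff picks, so it is left out.

```python
from collections import Counter

# Assumption: the grading app stores one of these four codes per player-snap.
# The rubric's en dash "–" is stored here as an ASCII "-".
VALID_CODES = {"+", "0", "-", "ME"}


def _graded(codes: list[str]) -> list[str]:
    """Keep only snaps carrying a valid rubric code (blanks/typos are ignored)."""
    return [c for c in codes if c in VALID_CODES]


def grade_pct(codes: list[str]) -> float:
    """Hypothetical definition: share of graded snaps marked '+'."""
    graded = _graded(codes)
    # No graded snaps -> 0.0 here; the staff should agree on this edge case once.
    return Counter(graded)["+"] / len(graded) if graded else 0.0


def me_rate(codes: list[str]) -> float:
    """Hypothetical definition: share of graded snaps marked 'ME' (mental error)."""
    graded = _graded(codes)
    return Counter(graded)["ME"] / len(graded) if graded else 0.0


# Illustrative grades for one player in one game (not real data).
week1 = ["+", "+", "0", "-", "ME", "+", "+", "0", "+", "+"]
print(f"Grade %: {grade_pct(week1):.0%}")  # 6 of 10 snaps -> "Grade %: 60%"
print(f"ME rate: {me_rate(week1):.0%}")    # 1 of 10 snaps -> "ME rate: 10%"
```

Because both measures share one filter, a stray code (a blank, a typo) is excluded the same way in Week 1 and in October, which is the consistency the list above asks for.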

The season‑long architecture (in plain language)

Think of this as three layers—Operate → Store/Shape → Show—with governance wrapped around everything.

1) Operate (your grading apps)

- You’re already capturing Players, Games, Plays, On‑Field, and Grades tables in Power Platform (Dataverse). That’s your operational system.

Data engineer’s move: Turn on Link to Microsoft Fabric from Dataverse so your tables are automatically and efficiently mirrored into OneLake (Fabric’s single, organization‑wide data lake) as Delta tables—made for analytics. No export jobs to babysit.

Why this matters: OneLake gives you “one copy” of your data that every analytics engine in Fabric and Power BI can use—faster, cheaper, and simpler than duplicating data all over the place.

2) Store & Shape (the lakehouse)

Inside Fabric, the engineer sets up a Lakehouse with a simple “medallion” flow:

- Bronze: the raw mirrored tables from Dataverse (unchanged).

- Silver: cleaned, typed, coach‑friendly columns (e.g., standardized grade codes, consistent position groups).

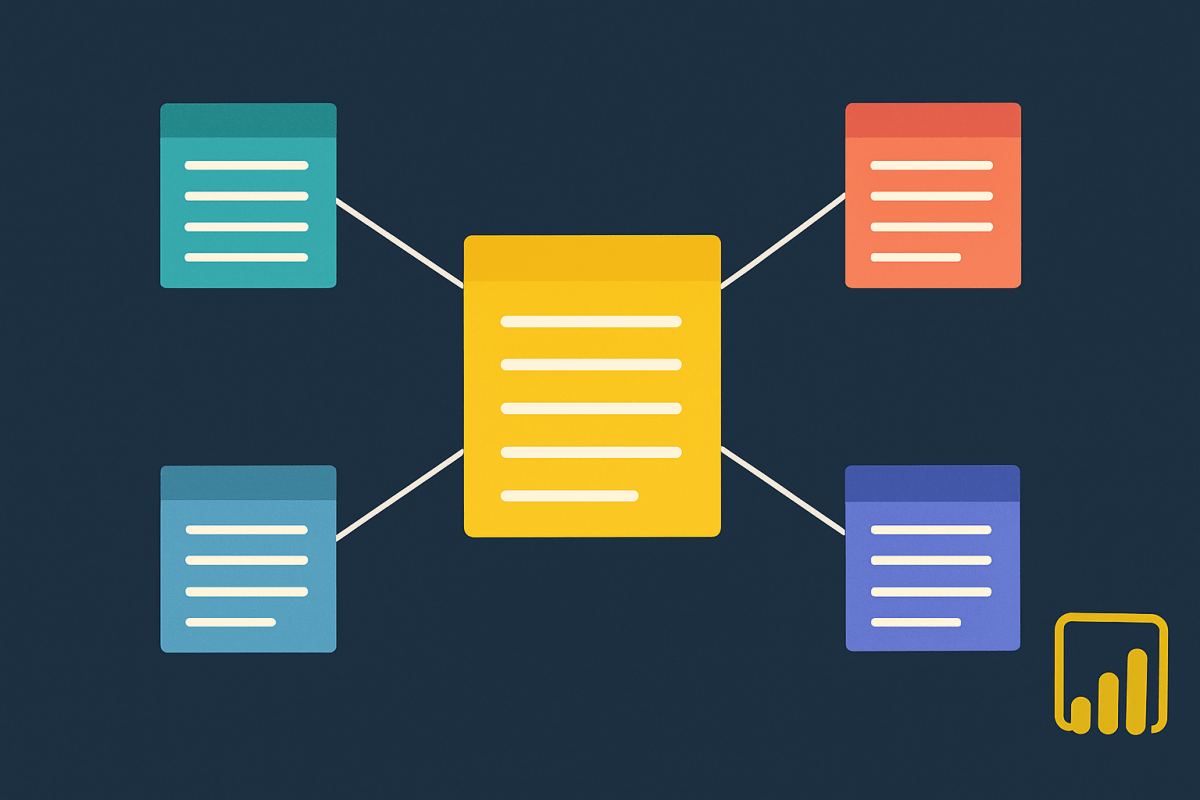

- Gold: a star schema built for analysis:

- Dimensions:

DimPlayer,DimGame,DimOpponent,DimPositionGroup,DimUnit. - Facts:

FactPlayerPlayGrade(one row per player‑play),FactPlayerGame(rollups), and optionalFactEvent(penalties, explosives, pressures).

- Dimensions:
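The Bronze → Silver → Gold flow above can be sketched in plain Python so the logic is easy to read and run anywhere. In Fabric this work would more likely live in a notebook (PySpark) or a Dataflow Gen2; the column names, the alias table, and the position-group lookup here are all hypothetical. Silver normalizes messy grade entries and position labels; Gold rolls one-row-per-player-play grades up into a per-player, per-game table in the spirit of FactPlayerGame, using assumed formulas (share of "+" snaps and share of "ME" snaps).

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

# Silver lookups (hypothetical; in practice these are small staff-maintained tables).
GRADE_ALIASES = {
    "+": "+", "plus": "+",
    "0": "0", "o": "0",
    "-": "-", "–": "-", "minus": "-",
    "me": "ME",
}
POSITION_GROUPS = {
    "LT": "OL", "LG": "OL", "C": "OL", "RG": "OL", "RT": "OL",
    "WR": "Skill", "RB": "Skill", "TE": "Skill",
}


@dataclass(frozen=True)
class PlayerPlayGrade:
    """One row of a FactPlayerPlayGrade-style table (one row per player-play)."""
    player_id: int
    game_id: int
    play_id: int
    position_group: str
    grade: str


def to_silver(raw: dict) -> Optional[PlayerPlayGrade]:
    """Clean and type one Bronze row; return None if the grade can't be mapped."""
    grade = GRADE_ALIASES.get(str(raw["grade"]).strip().lower())
    if grade is None:
        return None  # a real pipeline might route these to a "needs review" list
    return PlayerPlayGrade(
        player_id=int(raw["player_id"]),
        game_id=int(raw["game_id"]),
        play_id=int(raw["play_id"]),
        position_group=POSITION_GROUPS.get(raw["position"].strip().upper(), "Other"),
        grade=grade,
    )


def to_player_game(rows: list[PlayerPlayGrade]) -> dict:
    """Gold rollup: one FactPlayerGame-style record per (player_id, game_id)."""
    totals = defaultdict(lambda: {"snaps": 0, "plus": 0, "me": 0})
    for r in rows:
        t = totals[(r.player_id, r.game_id)]
        t["snaps"] += 1
        t["plus"] += int(r.grade == "+")
        t["me"] += int(r.grade == "ME")
    return {
        key: {**t, "grade_pct": t["plus"] / t["snaps"], "me_rate": t["me"] / t["snaps"]}
        for key, t in totals.items()
    }


# Illustrative Bronze rows as they might arrive from the app (strings, mixed case).
bronze = [
    {"player_id": "7", "game_id": "1", "play_id": "1", "position": "lt", "grade": "+"},
    {"player_id": "7", "game_id": "1", "play_id": "2", "position": "LT ", "grade": "–"},
    {"player_id": "7", "game_id": "1", "play_id": "3", "position": "LT", "grade": "me"},
    {"player_id": "7", "game_id": "1", "play_id": "4", "position": "LT", "grade": "Plus"},
    {"player_id": "7", "game_id": "1", "play_id": "5", "position": "LT", "grade": "??"},
]
silver = [row for row in map(to_silver, bronze) if row is not None]
gold = to_player_game(silver)
print(gold[(7, 1)])
# {'snaps': 4, 'plus': 2, 'me': 1, 'grade_pct': 0.5, 'me_rate': 0.25}
```

Rows that can't be mapped are dropped rather than guessed, so a typo never silently becomes a "+".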

Need to bring in other data (attendance, GPS, a scoreboard CSV, or cloud video marks)? Use OneLake Shortcuts to reference those files in‑place—no brittle copy jobs; still one lake, one security model.
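The shortcut itself is configured in Fabric, not in code; once it exists, the referenced file reads like any other lakehouse file. This sketch shows the enrichment step that follows: summarize a practice-attendance CSV and attach the result to player cards. The file layout (player_id, date, present) is an assumption, and an in-memory string stands in for the shortcut path so the example runs on its own.

```python
import csv
import io
from collections import defaultdict

# Stand-in for a practice-attendance CSV exposed through a OneLake shortcut.
# Columns are hypothetical; rows are illustrative, not real data.
ATTENDANCE_CSV = """player_id,date,present
7,2024-09-02,1
7,2024-09-03,1
7,2024-09-04,0
7,2024-09-05,1
12,2024-09-02,1
12,2024-09-03,1
"""


def attendance_rate(csv_text: str) -> dict[int, float]:
    """Return {player_id: share of recorded practices attended}."""
    tallies = defaultdict(lambda: [0, 0])  # player_id -> [attended, total]
    for row in csv.DictReader(io.StringIO(csv_text)):
        tally = tallies[int(row["player_id"])]
        tally[0] += int(row["present"])
        tally[1] += 1
    return {pid: attended / total for pid, (attended, total) in tallies.items()}


def enrich_cards(cards: dict[int, dict], rates: dict[int, float]) -> dict[int, dict]:
    """Add attendance to each card; players with no attendance rows get None."""
    return {pid: {**card, "attendance": rates.get(pid)} for pid, card in cards.items()}


cards = {7: {"name": "Player 7", "grade_pct": 0.60}, 12: {"name": "Player 12", "grade_pct": 0.72}}
enriched = enrich_cards(cards, attendance_rate(ATTENDANCE_CSV))
print(enriched[7]["attendance"])  # 3 of 4 practices -> 0.75
```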

Prefer low‑code data prep? Dataflows Gen2 (Power Query Online) can visually clean and land tables straight into the Lakehouse, which is often perfect for staff‑maintained lookups (e.g., rubric weights, drill catalogs).
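A small lookup like rubric weights is worth keeping as data rather than code. In this minimal Python sketch, the weights table (hypothetical values, not a recommendation) is the kind of thing staff could maintain through a Dataflow Gen2, and the scoring function simply reads whatever the table says, so a staff edit changes the result without anyone touching the logic.

```python
# Hypothetical staff-maintained lookup, e.g. landed in the Lakehouse by a Dataflow Gen2.
# Values are illustrative only.
RUBRIC_WEIGHTS = {"+": 1.0, "0": 0.5, "-": 0.0, "ME": 0.0}


def weighted_grade(codes: list[str], weights: dict[str, float]) -> float:
    """Average weight across snaps whose code appears in the lookup table."""
    scored = [weights[c] for c in codes if c in weights]
    return sum(scored) / len(scored) if scored else 0.0


snaps = ["+", "+", "0", "-", "ME"]
print(weighted_grade(snaps, RUBRIC_WEIGHTS))  # (1 + 1 + 0.5 + 0 + 0) / 5 = 0.5

# If the staff later decide an ME should cost more, only the table changes.
stricter = {**RUBRIC_WEIGHTS, "ME": -0.5}
print(f"{weighted_grade(snaps, stricter):.2f}")  # (1 + 1 + 0.5 + 0 - 0.5) / 5 = 0.40
```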

3) Show (Power BI with near‑instant reports)

From the Gold tables, the engineer builds a Power BI semantic model in Direct Lake mode, so reports feel “import‑fast” without nightly data copies. Direct Lake loads columns from the Delta tables in OneLake into memory on demand, so coaches can slice film‑grade data interactively without waiting.

Security: Players get a personal view; coaches see their room; coordinators and the HC see the whole team. That’s row‑level security (RLS) in Power BI: filters that restrict which rows each role can see. (Note: RLS applies only to users with the workspace Viewer role; Admin, Member, and Contributor roles bypass RLS by design, so your engineer will assign roles accordingly.)
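In Power BI, each RLS role is a DAX filter on a table; a player role, for example, might use something like `[PlayerEmail] = USERPRINCIPALNAME()` on DimPlayer (the column name is hypothetical). The Python sketch below only models the resulting behavior so the rules are easy to review with staff. The role names and user fields are assumptions, and the first check mirrors the point above that only workspace Viewers are filtered.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    email: str
    workspace_role: str              # "Admin", "Member", "Contributor", or "Viewer"
    rls_role: str                    # hypothetical roles: "Player", "PositionCoach", "TeamStaff"
    player_id: Optional[int] = None  # set for players
    room: Optional[str] = None       # position group a coach owns, e.g. "OL"


def visible_rows(user: User, rows: list[dict]) -> list[dict]:
    """Model which grade rows a user would see under the role rules described above."""
    if user.workspace_role != "Viewer":
        return list(rows)  # Admin/Member/Contributor are not filtered by RLS
    if user.rls_role == "Player":
        return [r for r in rows if r["player_id"] == user.player_id]
    if user.rls_role == "PositionCoach":
        return [r for r in rows if r["position_group"] == user.room]
    if user.rls_role == "TeamStaff":  # coordinators and the HC
        return list(rows)
    return []  # a Viewer outside every role gets no data


rows = [
    {"player_id": 7, "position_group": "OL", "grade": "+"},
    {"player_id": 12, "position_group": "Skill", "grade": "ME"},
    {"player_id": 7, "position_group": "OL", "grade": "0"},
]
player = User("p7@school.example", "Viewer", "Player", player_id=7)
ol_coach = User("ol@school.example", "Viewer", "PositionCoach", room="OL")
member = User("asst@school.example", "Member", "Player", player_id=7)
print(len(visible_rows(player, rows)), len(visible_rows(ol_coach, rows)), len(visible_rows(member, rows)))
# 2 2 3  (the Member sees everything despite the Player role)
```

The last case is why workspace roles for players stay at Viewer: the filter only protects people it actually applies to.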

Optional live signals: If you want live stats from the sideline tablet or wearables, Fabric’s Real‑Time Intelligence can ingest streams, transform them, and light up a simple “game day board” alongside your scouting notes—then archive those events for later analysis.

Governance everywhere: Fabric’s Purview hub and Domains organize data (e.g., an Athletics domain), apply sensitivity labels, and give leadership a clear view of what’s protected and endorsed. That keeps you aligned with district expectations for student data.

What coaches get (every week and across the season)

- Team Sheet (Monday): The single pager you already use—now backed by a model you can trust for trends and drill‑down.

- Player Cards (private): Grade %, ME rate, touches/targets/havoc plays, consistency streaks, and a short list of “next reps.”

- Room Dashboards:

- OL: pressure/sack accountability, technique flags (“late hands,” “fit late”), situational splits (3rd & medium, low red).

- Skill: route depth consistency, decision quality, yards after contact trends.

- Defense/ST: leverage/fit outcomes, impact plays, coverage/tackle reliability.

- Season Trends: Week‑over‑week ME rate, “win” rate by concept, opponent‑adjusted grades, class‑year cohorts, and offseason baselines that inform summer focus.
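Two of the season-trend ideas above, sketched in Python under stated assumptions: week-over-week change in ME rate (the weekly rates come from however your model defines ME rate), and a "consistency streak" read here as the longest run of consecutive "+" grades, which is a hypothetical definition. Opponent adjustment depends on how your staff rates opponents, so it is not attempted here.

```python
def week_over_week(weekly_rates: list[float]) -> list[float]:
    """Change from each week to the next; negative means fewer mental errors."""
    return [round(curr - prev, 4) for prev, curr in zip(weekly_rates, weekly_rates[1:])]


def longest_plus_streak(codes: list[str]) -> int:
    """Hypothetical 'consistency streak': longest run of consecutive '+' grades."""
    best = run = 0
    for code in codes:
        run = run + 1 if code == "+" else 0
        best = max(best, run)
    return best


# Illustrative numbers for one player (not real data).
me_rates = [0.12, 0.10, 0.07, 0.08]  # Weeks 1-4
print(week_over_week(me_rates))      # [-0.02, -0.03, 0.01]
print(longest_plus_streak(["+", "+", "0", "+", "+", "+", "-"]))  # 3
```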

All of this stays coach‑friendly because the data engineer bakes the definitions into the semantic model once—and everyone uses the same truth thereafter.

How the partnership runs (no drama)

Your job stays the same: grade the film with the simple rubric and leave one‑phrase notes where they change practice. The apps you’re already using remain the front door.

The engineer’s steady cadence:

- Keep the Dataverse → OneLake link healthy (automatic mirroring).

- Maintain Silver/Gold transforms (Dataflows Gen2 or notebooks) and the star schema.

- Evolve the Power BI model (measures you care about, like “Drive‑ending MEs”).

- Govern access (RLS for players, workspace roles for staff, sensitivity labels in Purview).

- Publish a Power BI app to staff and players so nobody is hunting for links.

A note on licensing (so you can plan)

- Authors/publishers (the engineer and any coach who edits reports) need Power BI Pro.

- Report consumers (read‑only viewers) typically also need Pro, unless your district licenses a Fabric capacity at F64 or higher—in which case Free viewers can access content in workspaces on that capacity. Your engineer can help align the workspace to whatever you have.
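The licensing rule above is simple enough to write down as a planning check. This Python sketch covers only the two cases described here (Pro for anyone who edits or publishes; Free viewers allowed on a Fabric capacity of F64 or higher) and deliberately ignores other options such as Premium Per User, so treat it as a rough headcount aid. The roster is hypothetical.

```python
import re
from typing import Optional


def license_for(edits_reports: bool, capacity_sku: Optional[str]) -> str:
    """Rough planning rule: which Power BI license a person needs (simplified)."""
    if edits_reports:
        return "Pro"  # authors/publishers need Pro, per the rule above
    # Read-only viewers can be Free only on a Fabric capacity of F64 or higher.
    match = re.fullmatch(r"F(\d+)", (capacity_sku or "").strip().upper())
    if match and int(match.group(1)) >= 64:
        return "Free"
    return "Pro"


# Hypothetical roster: name -> does this person edit reports?
roster = {"engineer": True, "head coach": True, "OL coach": False, "player 7": False}
for sku in (None, "F64"):
    needed = {name: license_for(edits, sku) for name, edits in roster.items()}
    pro_count = sum(v == "Pro" for v in needed.values())
    print(sku or "no capacity", "-> Pro licenses:", pro_count)
# no capacity -> Pro licenses: 4
# F64 -> Pro licenses: 2
```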

Starter scope (what we actually build first)

- Link Dataverse to Fabric (players, games, plays, on‑field, grades mirror into OneLake).

- Gold model with just the essentials: FactPlayerPlayGrade, DimPlayer, DimGame, DimOpponent, DimPositionGroup.

- Power BI semantic model in Direct Lake + three reports:

- Team Sheet (game rollups).

- Room View (filters + technique flags).

- Private Player Card (RLS).

- OneLake Shortcut to any external CSV (e.g., practice attendance) to enrich Player Cards without copying data.

- Purview basics: sensitivity labels + endorsement so admins know what’s trusted.

From there, add opponent scouting cuts, practice‑plan exports, or real‑time sideline boards as you see value.

Guardrails for student data (plain talk)

- Least privilege: players = Viewer + RLS; position coaches see only their room; coordinators/HC get team‑wide. (Workspace Admins, Members, and Contributors bypass RLS, so keep those roles tight.)

- Governance: put your items in an Athletics domain; apply sensitivity labels; monitor with Purview hub. Your district data lead will appreciate the visibility.

Why Fabric + Power BI works for high school programs

- One system of record from your grading apps to your dashboards—no wobbly exports.

- Fast, coach‑friendly reports via Direct Lake (open, filter, decide).

- Zero‑copy expansion with OneLake Shortcuts when you want to fold in other files/sources.

- Built‑in governance your district already understands (Purview + domains, RLS).

If you want this as an “Athletics Analytics” blueprint, the ingredients above are all you need. Your staff keeps doing what works on Saturday; the data engineer quietly turns that into compounding advantage by Monday—and keeps the season’s trail of evidence organized for next year’s goals.