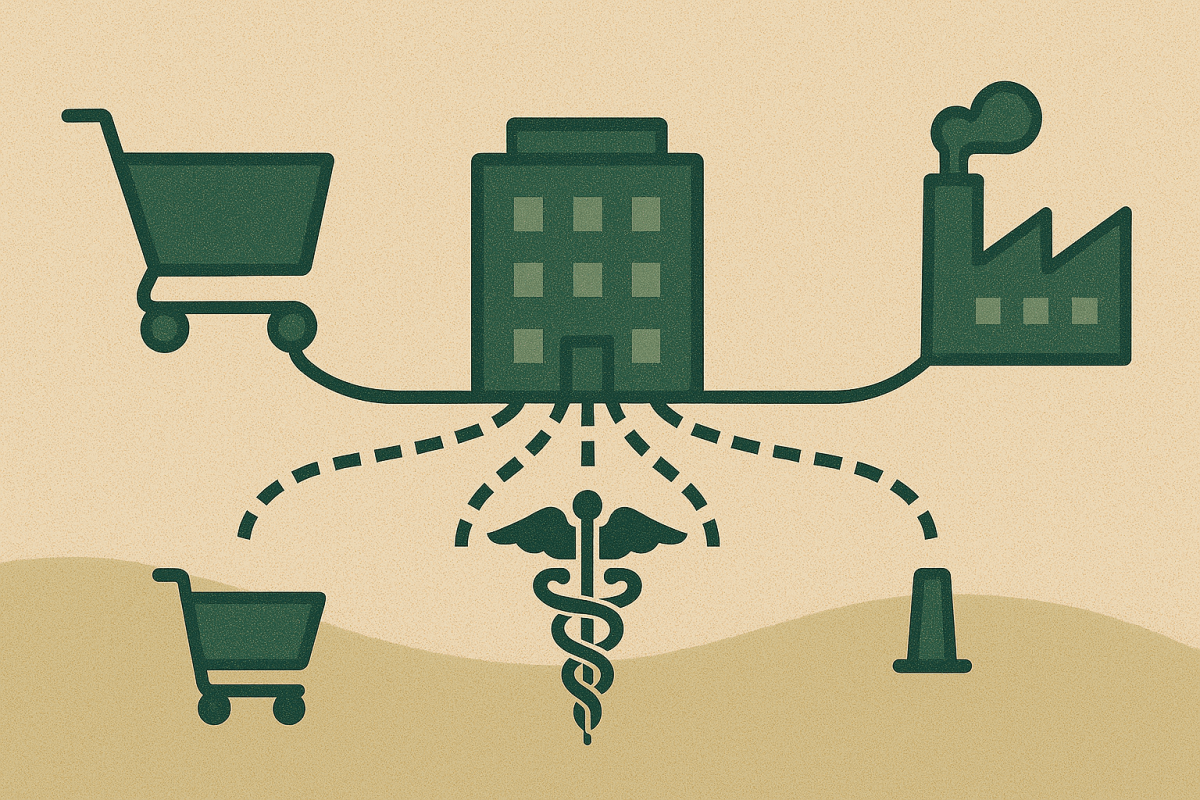

Every organization has white space: important work that lives between teams and across systems, almost always has to produce evidence, and, despite its value, rarely reaches the top of the backlog. In software engineering, that is the unglamorous backbone of quality: keeping documentation and runbooks current, sustaining full test coverage (beyond unit tests), and validating against standards (security, accessibility, SBOM/licensing). In manufacturing, it shows up as traceability and shipment evidence (SPC, PPAP/FAI, calibration certificates) and as keeping control plans and PFMEAs in sync with engineering changes. In education, it appears as aligning curricula to standards, running accessibility and privacy checks across LMS content, and following through on interventions after assessments. These jobs cross many systems, require judgment, must leave an audit trail, and are perpetually “important but not urgent.” That makes them perfect territory for delegating to digital workers: software teammates that live in the seams, move work to done, and attach the receipts as they go.

“To effectively delegate these tasks they need knowledge, access, and some intangibles.” (Nathan Lasnoski)

A digital worker earns real delegation only when three things are in place: knowledge (trusted sources, rubrics, examples), access (the right tools and permissions under guardrails), and the intangibles of a good teammate (when to act vs. ask, tone, and norms). With that foundation, a coaching worker can also serve as a worker‑as‑judge: it applies explicit rubrics, pulls evidence across systems, returns a pass/fail or “needs work” verdict with a brief rationale, and offers an easy appeal to a human. The payoff is fast, fair, actionable feedback that feels like having a senior reviewer on call 24/7, something frontline teams welcome.
